Personal service robots must be able to identify the specific person to whom they provide services. We have been developing a method for detecting and tracking a specific person using multiple visual and sensory features: visual features (face, color, gradient, intensity pattern, etc.), shape features (body shape, height, etc.), and motion features (gait). We apply on-line boosting to select features adaptively.

[References]

- K. Koide and J. Miura, "Identification of a Specific Person using Color, Height, and Gait Features for a Person Following Robot", Robotics and Autonomous Systems, 2016.

- K. Koide and J. Miura, "Convolutional Channel Features-based Person Identification for Person Following Robots", 15th International Conference on Intelligent Autonomous Systems, 2018.

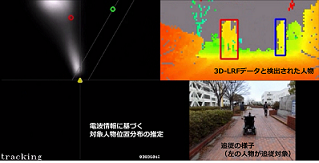

We have developed a robot that can robustly follow a specific person outdoors. The robot uses two ESPAR antennas (developed by Prof. Ohira of TUT) to localize the person, who holds a radio transmitter, and a 3D LIDAR for person and obstacle detection. Thanks to the ESPAR antennas, the system can track the specific person even when he/she is out of view of the LIDAR. In addition, the system can be used at nighttime.

[References]

- K. Misu and J. Miura, "Specific Person Tracking using 3D LIDAR and ESPAR Antenna for Mobile Service Robots", Advanced Robotics, Vol. 29, No. 22, pp. 1483-1495, 2015.

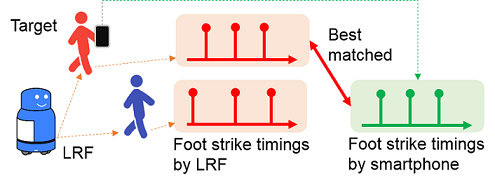

Visual person identification can be difficult under illumination changes and long-term occlusions. We have developed a method that localizes a specific person very robustly. The method uses foot strike timing information measured by the person's smartphone. The robot also measures the same timing information for every person nearby with its 2D LIDARs. By matching the two kinds of information, the robot can localize the person even after a long-term occlusion.
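The matching step can be illustrated with a minimal sketch. It assumes that foot strikes are already available as timestamps in seconds on a shared clock, and it scores each tracked person by the mean gap between every smartphone strike and the nearest LIDAR-derived strike. The function names, the scoring rule, and the sample data are hypothetical and are not taken from the paper.

```python
from bisect import bisect_left

def mean_nearest_gap(phone_times, track_times):
    """Mean absolute gap (s) from each phone strike to the nearest track strike."""
    if not phone_times or not track_times:
        return float("inf")
    track_sorted = sorted(track_times)
    total = 0.0
    for t in phone_times:
        i = bisect_left(track_sorted, t)
        # Only the neighbours on either side of the insertion point can be nearest.
        neighbours = track_sorted[max(i - 1, 0):i + 1]
        total += min(abs(t - n) for n in neighbours)
    return total / len(phone_times)

def identify_target(phone_times, tracks):
    """Return the track id whose strike timings best match the phone, plus all scores."""
    scores = {pid: mean_nearest_gap(phone_times, times) for pid, times in tracks.items()}
    best = min(scores, key=scores.get)
    return best, scores

# Illustrative data: track "A" walks in step with the phone, track "B" does not.
phone = [0.00, 0.52, 1.05, 1.58]
tracks = {
    "A": [0.02, 0.55, 1.03, 1.60],
    "B": [0.25, 0.80, 1.30, 1.85],
}
best, scores = identify_target(phone, tracks)
```

A real system would also have to handle clock offset between the smartphone and the robot and missed or spurious strikes, which this sketch ignores.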

[References]

- K. Koide and J. Miura, "Person Identification Based on the Matching of Foot Strike Timings Obtained by LRFs and Smartphone", IEEE/RSJ Int. Conf. on Intelligent Robots and Systems (IROS), Daejeon, Korea, 2016.

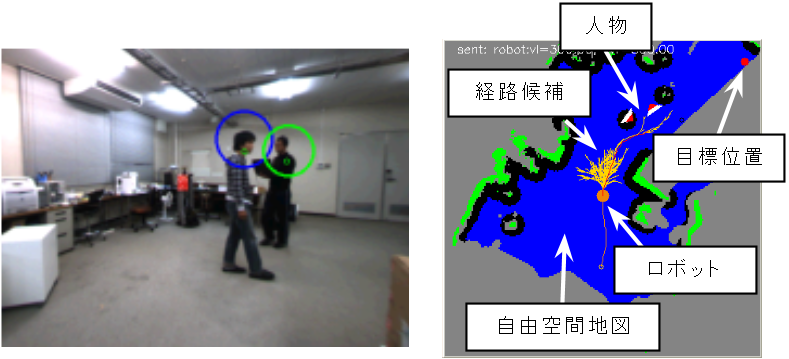

Path planning is necessary for a mobile robot to move safely and efficiently towards the destination while avoiding obstacles. To respond appropriately to the dynamics of the surrounding environment (e.g., walking persons), path planning must be performed in real time. We propose the arrival-time field, which guides the expansion of an RRT (Rapidly-exploring Random Tree) variant towards promising regions so that both efficiency and safety are achieved.
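As a rough illustration of the idea, the sketch below computes a simple arrival-time field on an occupancy grid and uses it to bias sample selection. It is a stand-in under stated assumptions, not the method of the papers: the field is computed with a Dijkstra wavefront from the goal at constant speed on a 4-connected grid, and the bias simply keeps the best of `k` random draws. All names and parameters are hypothetical.

```python
import heapq
import random

INF = float("inf")

def arrival_time_field(grid, source, speed=1.0):
    """Earliest time to reach each free cell from `source` (assumed free).

    grid[r][c] == 1 marks an obstacle; cells are 4-connected with unit spacing.
    """
    rows, cols = len(grid), len(grid[0])
    field = [[INF] * cols for _ in range(rows)]
    field[source[0]][source[1]] = 0.0
    heap = [(0.0, source)]
    while heap:
        t, (r, c) = heapq.heappop(heap)
        if t > field[r][c]:
            continue  # stale queue entry
        for dr, dc in ((1, 0), (-1, 0), (0, 1), (0, -1)):
            nr, nc = r + dr, c + dc
            if 0 <= nr < rows and 0 <= nc < cols and grid[nr][nc] == 0:
                nt = t + 1.0 / speed
                if nt < field[nr][nc]:
                    field[nr][nc] = nt
                    heapq.heappush(heap, (nt, (nr, nc)))
    return field

def biased_sample(field, rng, k=5):
    """Draw k reachable cells uniformly and keep the one with the smallest field value."""
    reachable = [(r, c) for r, row in enumerate(field)
                 for c, t in enumerate(row) if t < INF]
    draws = [rng.choice(reachable) for _ in range(k)]
    return min(draws, key=lambda rc: field[rc[0]][rc[1]])

grid = [
    [0, 0, 0, 0],
    [0, 1, 1, 0],
    [0, 0, 0, 0],
]
field = arrival_time_field(grid, source=(2, 3))   # field measured from the goal
sample = biased_sample(field, random.Random(0))   # candidate for tree expansion
```

In a full planner the sampled cell would be passed to the usual RRT extend step; larger `k` pulls samples more strongly towards low arrival-time regions at the cost of exploration.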

[References]

- I. Ardiyanto and J. Miura, "Real-time Navigation using Randomized Kinodynamic Planning with Arrival Time Field", Robotics and Autonomous Systems, Vol. 60, No. 12, pp. 1579-1591, 2012.

- I. Ardiyanto and J. Miura, "3D Time-space Path Planning Algorithm in Dynamic Environment Utilizing Arrival Time Field and Heuristically Randomized Tree", 2012 IEEE International Conference on Robotics and Automation (ICRA), 2012.

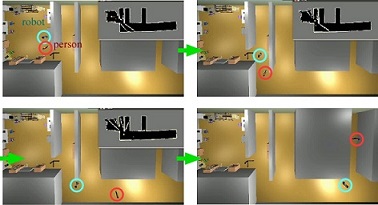

An attendant robot has to choose an appropriate position with respect to the target person depending on the person's state. We have developed a viewpoint planning method that lets the robot watch the target person from a distance, without always staying close, for example while he/she is talking with others or walking around in a museum. This behavior is generated by minimizing the robot's movement as long as it does not lose sight of the target.
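The "move as little as possible while keeping the target in sight" rule can be sketched on an occupancy grid. This is a simplified illustration under several assumptions: visibility is tested by sampling the straight segment between two cells, movement cost is plain Euclidean distance (ignoring detours around obstacles), and the function names are hypothetical. It does not reproduce the skeletonization or geodesic motion model of the referenced paper.

```python
import math

def has_line_of_sight(grid, a, b):
    """True if the straight segment between cells a and b hits no obstacle (value 1)."""
    steps = max(abs(b[0] - a[0]), abs(b[1] - a[1])) * 2 + 1
    for i in range(steps + 1):
        s = i / steps
        r = round(a[0] + s * (b[0] - a[0]))
        c = round(a[1] + s * (b[1] - a[1]))
        if grid[r][c] == 1:
            return False
    return True

def next_viewpoint(grid, robot, target):
    """Stay put while the target is visible; otherwise pick the nearest free cell that sees it.

    Returns None when no free cell has a view of the target.
    """
    if has_line_of_sight(grid, robot, target):
        return robot
    visible = [
        (r, c)
        for r, row in enumerate(grid)
        for c, v in enumerate(row)
        if v == 0 and (r, c) != target and has_line_of_sight(grid, (r, c), target)
    ]
    if not visible:
        return None
    return min(visible, key=lambda p: math.dist(p, robot))

grid = [
    [0, 0, 0, 0, 0],
    [0, 1, 1, 1, 0],
    [0, 0, 0, 0, 0],
]
stay = next_viewpoint(grid, robot=(2, 0), target=(2, 2))  # target visible: no movement
move = next_viewpoint(grid, robot=(0, 2), target=(2, 2))  # wall in the way: relocate
```

Because the robot only relocates when visibility is lost, it remains at a distance while the person stays within view.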

[References]

- I. Ardiyanto and J. Miura, "Visibility-based Viewpoint Planning for Guard Robot using Skeletonization and Geodesic Motion Model", Proc. 2013 IEEE Int. Conf. on Robotics and Automation, pp. 652-658, Karlsruhe, Germany, May 2013. (Best Service Robotics Paper Award Finalist).

- I. Ardiyanto and J. Miura, "Partial Least Squares-based Human Upper Body Orientation Estimation with Combined Detection and Tracking", Image and Vision Computing, Vol. 32, No. 11, pp. 904-915, 2014.

- I. Ardiyanto and J. Miura, "Human Motion Prediction Considering Environmental Context", Proc. 2015 IAPR Int. Conf. on Machine Vision Applications, pp. 390-393, Tokyo, Japan, May 2015.

The face is a strong feature for person detection and identification. Face images are, however, degraded under bad illumination conditions such as backlighting. We have developed an illumination normalization method that converts faces under any illumination condition to a fairly consistent appearance, thereby making face detection and identification models very robust. The conversion is done by fuzzy inference optimized with a genetic algorithm (GA) and runs in real time.

[References]

- B.S.B. Dewantara and J. Miura, "OptiFuzz: A Robust Illumination Invariant Face Recognition System and Its Implementation", Machine Vision and Applications, 2016.

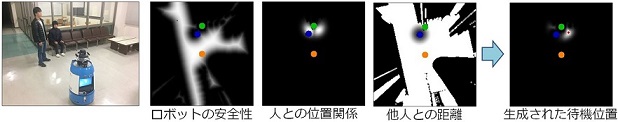

We have been developing methods for adaptive attending that choose appropriate behaviors according to the target person's state. We have developed a method that classifies the user's state as walking or sitting, then calculates an appropriate position for the robot based on personal and social distances as well as collision checks against obstacles.
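The positioning step can be sketched as follows, assuming the state has already been classified. Candidate positions are placed on a ring around the person, candidates that collide with circular obstacles are dropped, and the one closest to the robot is chosen. The stand-off distances, the mapping from state to distance, the clearance, and all names are hypothetical illustration values, not those of the paper.

```python
import math

# Hypothetical stand-off distances in metres (illustrative only):
# closer while walking together, farther while the person is seated.
STANDOFF = {"walking": 1.0, "sitting": 2.0}

def attending_position(state, person, robot, obstacles, clearance=0.4, n_candidates=16):
    """Pick a collision-free point at the state's stand-off distance, nearest to the robot.

    obstacles: iterable of (x, y, radius) circles. Returns None if every candidate collides.
    """
    radius = STANDOFF[state]
    best, best_cost = None, float("inf")
    for k in range(n_candidates):
        angle = 2.0 * math.pi * k / n_candidates
        cand = (person[0] + radius * math.cos(angle),
                person[1] + radius * math.sin(angle))
        # Collision check: keep a clearance margin around every obstacle.
        if any(math.dist(cand, (ox, oy)) < orad + clearance for ox, oy, orad in obstacles):
            continue
        cost = math.dist(cand, robot)
        if cost < best_cost:
            best, best_cost = cand, cost
    return best

person, robot = (0.0, 0.0), (3.0, 0.0)
free_walk = attending_position("walking", person, robot, obstacles=[])
free_sit = attending_position("sitting", person, robot, obstacles=[])
blocked = attending_position("walking", person, robot, obstacles=[(1.0, 0.0, 0.3)])
```

With no obstacles the robot stands directly between its current position and the person; when that spot is blocked, it falls back to the nearest free point on the same ring.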

[References]

- S. Oishi, Y. Kohari, and J. Miura, "Toward a Robotic Attendant Adaptively Behaving according to Human State", Proc. 2016 IEEE Int. Symp. on Robot and Human Interactive Communication (RO-MAN 2016), New York, U.S.A., Aug. 2016 (to appear).